Global AI for 5G Challenge (2022)

At Supercom, we collaborate with ITU-T and host a problem statement in the 2022 edition of the ITU Global AI/ML Challenge (https://challenge.aiforgood.itu.int/). The ITU AI Challenge is a competition that gathers professionals and students from all over the world with the aim of solving real-world problems in communications through AI and ML.

[NEWS] Upcoming round-table session, 8 September 2022, 14:00 CEST: Registration here.

[Subscribe to the Challenge's mailing list] Just drop an email to supercom@cttc.es with the subject "Subscribe to challenge's mailing list".

[Webinar 1] Introduction to the problem statement (video+slides): https://aiforgood.itu.int/event/federated-traffic-prediction-for-5g-and-beyond/

1. Introduction

Traffic prediction is a key ingredient for meeting the goals of next-generation communications systems and for realizing self-adaptability in mobile networks. The vast population of devices connected to base stations provides rich data to train Machine Learning (ML) models, but it can also compromise the efficiency of such models (e.g., temporal responsiveness) due to potential communication bottlenecks. In this regard, Federated Learning (FL) [1] arises as a compelling solution that provides robust ML optimization while keeping the communication overhead low. In FL, devices exchange model parameters rather than raw training data, which also enhances privacy.
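As a rough illustration of what "exchanging model parameters" can look like, the sketch below averages the clients' parameter vectors, weighting each client by its number of training samples (a FedAvg-style aggregation). This is only one possible aggregation rule and is not mandated by the problem statement; the function name `federated_average`, the flat-list representation of a model, and the toy numbers are assumptions made for the example.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Return the sample-size-weighted mean of the clients' parameter vectors."""
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one non-empty weight vector per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            # Each client contributes in proportion to its local data size.
            averaged[i] += w * size / total
    return averaged


# Hypothetical example: three clients, each holding a two-parameter model.
local_models = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
samples_per_client = [1, 1, 2]
global_model = federated_average(local_models, samples_per_client)
print(global_model)  # [3.5, 4.5]
```

Only the parameter vectors and the sample counts leave the clients in this scheme; the raw measurements stay local.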

This problem statement proposes the usage of FL tools to predict the traffic in cellular networks collaboratively. To that purpose, we provide an unlabeled dataset that contains data from unknown LTE users of commercial operators at three different specific locations. More details regarding the dataset and "starting-point" ML models can be found at [2].

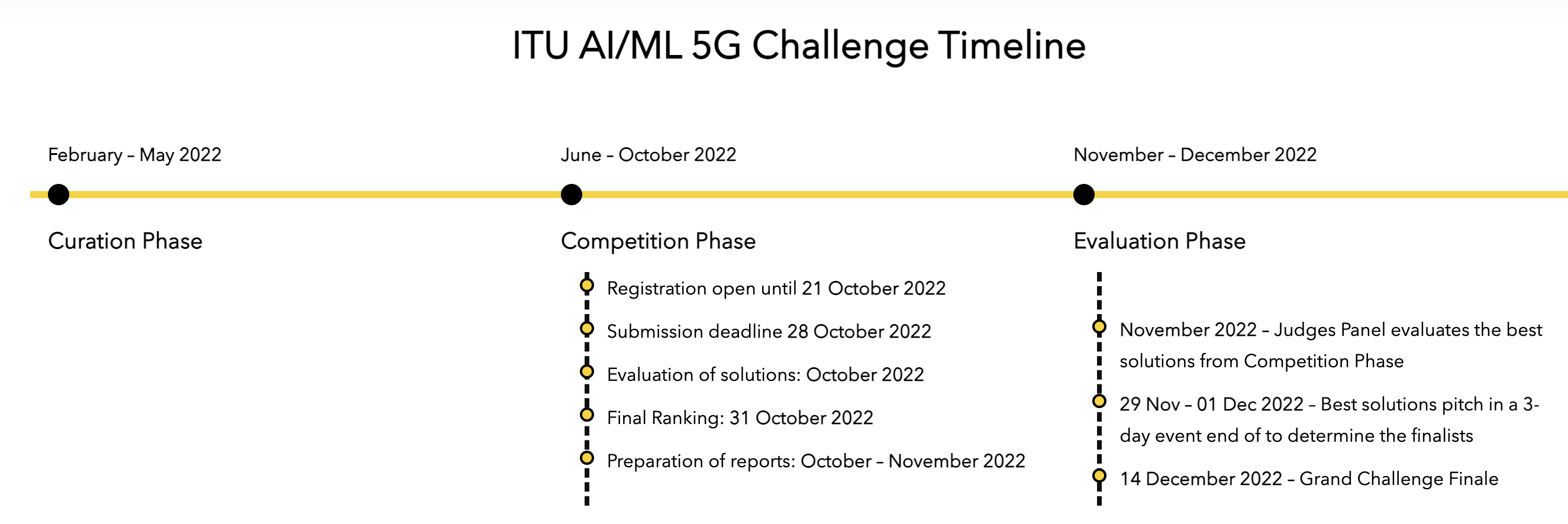

1.1 Timeline

- Registration period (see section 1.2): open until 21 October 2022

- Publication of the training data set: Available now (registration needed: https://challenge.aiforgood.itu.int/)

- Submission deadline: 28 October 2022

- Participants ranking and publication of the test data set: 31 October 2022

1.2 Registration

You can register at https://challenge.aiforgood.itu.int/

The steps you need to follow are:

- Participants should register at the official website.

- Choose the problem statement to work on: "Federated Traffic Prediction for 5G and Beyond".

- Choose a team leader who will “create the team [e.g., Supercom]” under the problem statement "Federated Traffic Prediction for 5G and Beyond".

- Admin will approve the team creation.

- Team members request to join the team [Supercom].

- The team leader should approve each request to join the team.

We also encourage participants to join the challenge's mailing list (see details above) and to use ITU's official Slack channel: https://join.slack.com/t/itu-challenge/shared_invite/zt-eql00z05-CXelo7_aL0nHGM7xDDvTmA

1.3 Prizes

Among the prizes are:

- 3,000 CHF for 1st prize and 2,000 CHF for 2nd prize

- 1,000 CHF for the winner of each problem statement

- 300 CHF, Audience prize: based on a customized poll run by ITU during the Grand Challenge Finale

- 500 CHF, Student award

2. Data set and Resources

2.1 Description of the Dataset

A dataset with real LTE Physical Downlink Control Channel (PDCCH) measurements, obtained using OWL [3] at three different locations, will be provided to train ML models in a federated manner. The dataset is both anonymous, because it is impossible to obtain users’ unique identifiers, and accurate, because individual communications can be separated within the dataset to obtain high-definition traces.

The training dataset is a pickle (.pkl) file that contains the data of each client structured as an OrderedDict. The dataset was obtained by performing measurements at three different base stations (BSs) in Barcelona, Spain. The size of each site’s data varies and depends on how long measurements were performed. In particular, the dataset covers the following sites/locations, which will be considered as separate FL clients:

- Location 1, 'ElBorn': 5421 samples, collected from 2018-03-28 15:56:00 to 2018-04-04 22:36:00.

- Location 2, 'LesCorts': 8615 samples, collected from 2019-01-12 17:12:00 to 2019-01-24 16:20:00.

- Location 3, 'PobleSec': 19909 samples, collected from 2018-02-05 23:40:00 to 2018-03-05 15:16:00.
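The following sketch shows one way to open such a pickle file and iterate over the clients. Because the real file is only available after registration, the example first writes a small stand-in file; the file name `toy_dataset.pkl` and the per-client layout (a dict of feature lists) are assumptions made for illustration, and the actual per-client objects may be structured differently.

```python
import pickle
import tempfile
from collections import OrderedDict
from pathlib import Path

# Stand-in for the real file: the keys match the three sites named above, but
# the per-client payload (a dict of feature lists) is an assumed layout.
toy = OrderedDict(
    (site, {"down": [0.0, 1.0], "up": [0.0, 0.5]})
    for site in ("ElBorn", "LesCorts", "PobleSec")
)

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "toy_dataset.pkl"  # hypothetical file name
    with path.open("wb") as fh:
        pickle.dump(toy, fh)

    # Loading works the same way for a real file. Only unpickle files from a
    # trusted source, since pickle can execute arbitrary code on load.
    with path.open("rb") as fh:
        clients = pickle.load(fh)

# Each top-level key is treated as one FL client.
for site, data in clients.items():
    print(site, sorted(data))
```

Inspecting the type and keys of each client entry right after loading is a cheap way to confirm the real layout before building a training pipeline on top of it.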

For each site, the following features (which were averaged every 120 seconds during measurements) are provided:

- 'down' (transport block size in the downlink)

- 'up' (transport block size in the uplink)

- 'rnti_count' (Radio Network Temporary Identifiers count)

- 'mcs_down' (Modulation and Coding Scheme (download))

- 'mcs_down_var' (variance of mcs_down)

- 'mcs_up' (Modulation and Coding Scheme (upload))

- 'mcs_up_var' (variance of mcs_up)

- 'rb_down' (number of allocated resource blocks (download))

- 'rb_down_var' (normalized variance of rb_down)

- 'rb_up' (number of allocated resource blocks (upload))

- 'rb_up_var' (normalized variance of rb_up)
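Since each feature is a regularly sampled time series and the submitted model must make one-step predictions (see section 3), a common way to frame the training data is as sliding windows: the last few samples are the input and the next sample is the target. The sketch below is a minimal version of that idea; the helper name `make_windows`, the window length, and the sample values are made up for the example and are not prescribed by the challenge.

```python
from typing import List, Sequence, Tuple


def make_windows(
    series: Sequence[float], window: int
) -> Tuple[List[List[float]], List[float]]:
    """Split a series into (inputs, targets) pairs for one-step prediction."""
    if window < 1 or window >= len(series):
        raise ValueError("window must be in [1, len(series) - 1]")
    inputs, targets = [], []
    for start in range(len(series) - window):
        # Input: `window` consecutive samples; target: the sample that follows.
        inputs.append(list(series[start:start + window]))
        targets.append(series[start + window])
    return inputs, targets


down = [10.0, 12.0, 11.0, 15.0, 14.0]  # made-up 'down' values
x, y = make_windows(down, window=3)
print(x)  # [[10.0, 12.0, 11.0], [12.0, 11.0, 15.0]]
print(y)  # [15.0, 14.0]
```

With 120-second samples, a window of 3 would correspond to six minutes of history; the right length is a modelling choice left to participants.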

2.2 Federated Learning resources

Participants are encouraged to use TensorFlow Federated (TFF) libraries (available here) or PyTorch, but other FL solutions are accepted (e.g., custom solutions).

To install TensorFlow Federated (TFF), please refer to this website. A useful video tutorial on TFF can be found here.

3. Submission guidelines

- Deliverable 1: the code used to train the solution and the resulting model weights in .h5 format. The model needs to be trained to make one-step predictions on the test data set. See section 4 (Evaluation) for more details.

- Deliverable 2: a report explaining the proposed FL model, as well as the technical details regarding pre-processing, training, validation, etc. It is suggested to follow this structure when writing the report:

  - Introduction: Source code + documentation

  - Methodology: method used and motivation, description of the proposed FL solution (model, optimizers, aggregation method, etc.).

  - Pre-processing & Training: how data has been prepared to feed the selected FL model, how training is done (e.g., are contexts randomly selected in each iteration?)

  - Results

  - Encountered issues

- Deliverable 3: A presentation in the grand challenge finale.

The submission of Deliverable 1 and Deliverable 2 will be done via email, to supercom@cttc.es.

4. Evaluation

The evaluation of the submitted solutions will be done on a test dataset.

| | rnti_count | rb_down | rb_up | down | up |
| --- | --- | --- | --- | --- | --- |
| #1 | 4277 | 1.961025e-08 | 0.008343 | 38490328.0 | 18729576.0 |
| #2 | 5201 | 1.987812e-08 | 0.009248 | 39124325.0 | 19131203.0 |
| #3 | 3127 | 1.881235e-08 | 0.008112 | 36651701.0 | 18254260.0 |
| ... | ... | ... | ... | ... | ... |

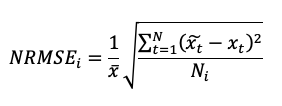

The submitted predictions will be evaluated using the Normalized Root Mean Square Error (NRMSE) of the 'up' and 'down' features.
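For local validation, an NRMSE can be computed along the following lines. The normalization used by the organizers is not reproduced in this text, so the sketch assumes division by the range (maximum minus minimum) of the ground truth, which is one common convention; dividing by the mean or the standard deviation are equally plausible alternatives. The function name `nrmse` and the sample values are illustrative only.

```python
import math
from typing import Sequence


def nrmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """RMSE divided by the range of the ground truth (assumed normalization)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    value_range = max(y_true) - min(y_true)
    if value_range == 0:
        raise ValueError("ground truth is constant; range normalization undefined")
    return math.sqrt(mse) / value_range


# Made-up values standing in for one feature (e.g., 'up' or 'down').
truth = [0.0, 2.0, 4.0]
pred = [1.0, 2.0, 3.0]
print(nrmse(truth, pred))
```

Computing the metric separately for 'up' and 'down' and then combining the two values (for instance by averaging) would be one way to obtain a single score, but how the two features are combined in the official ranking is not stated here.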

5. References

[1] Konečný, J., McMahan, H. B., Yu, F. X., Richtárik, P., Suresh, A. T., & Bacon, D. (2016). Federated learning: Strategies for improving communication efficiency. arXiv preprint arXiv:1610.05492.

[2] Trinh, H. D., Gambin, A. F., Giupponi, L., Rossi, M., & Dini, P. (2020). Mobile traffic classification through physical control channel fingerprinting: a deep learning approach. IEEE Transactions on Network and Service Management, 18(2), 1946-1961. [Open-access version]

6. Contact

Supercom (supercom@cttc.es)

Paolo Dini (paolo.dini@cttc.es)

Marco Miozzo (marco.miozzo@cttc.cat)

ITU AI Challenge committee (ai5gchallenge@itu.int)